Local Used Items Analysis with Python and Tableau

"One Man's Trash is Another Man's Treasure!"

Background

I’m fascinated by the second-hand market. People now exchange items within their communities more than ever, and Rachel Botsman’s argument in favor of Collaborative Consumption inspires me. I’ve always been partly amazed and partly disturbed by how many manufactured goods we consume.

For better or worse, the internet has hyperconnected us. In many respects, the boundaries between online and offline life are blurring. One benefit is the ability to buy and sell used goods with strangers nearby. Not too long ago, most people saw this as crazy and too risky, but we’ve come a long way.

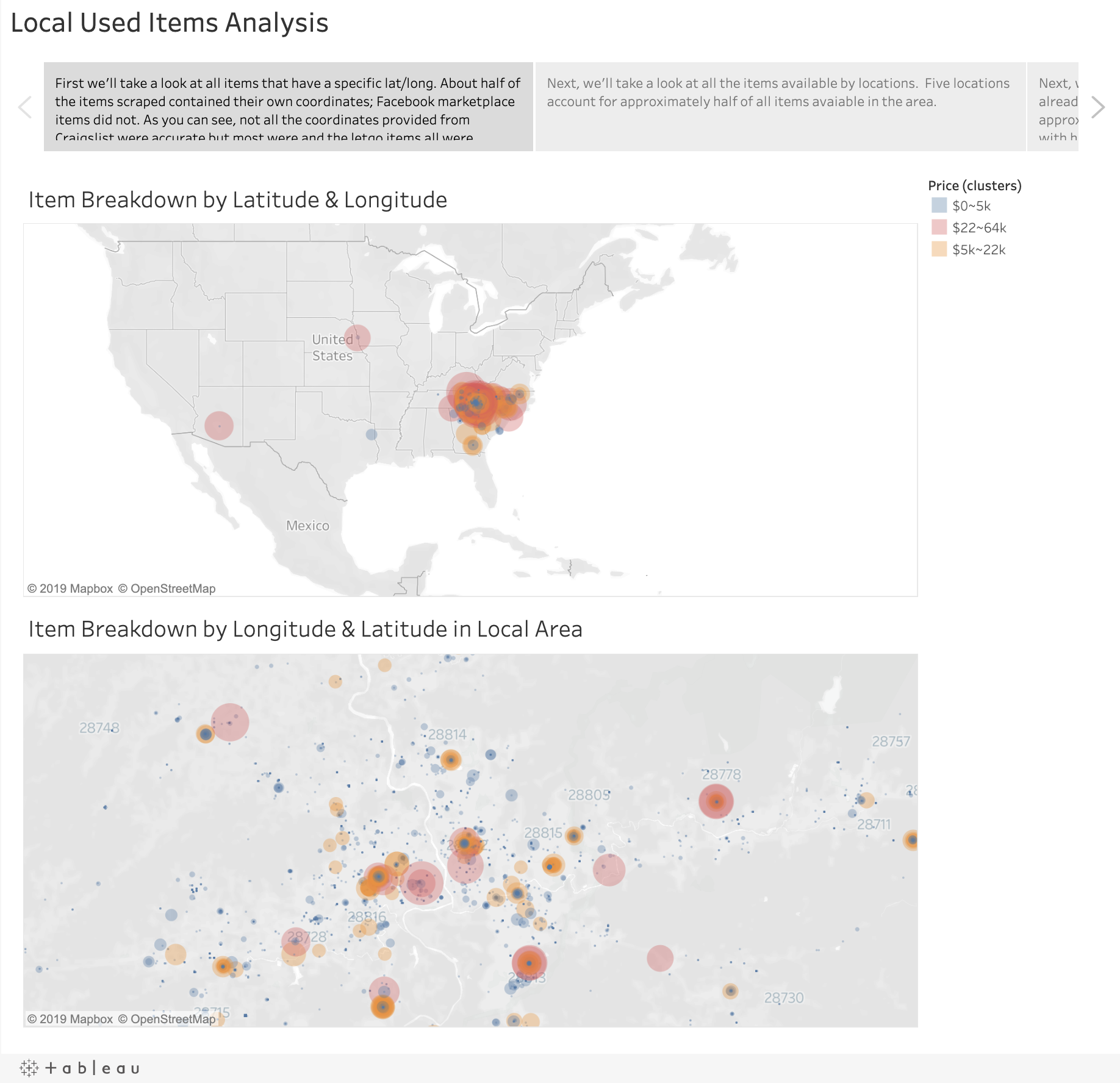

My hope is that this process will continue to improve and that norms of hyper-consumption will recalibrate into a more balanced state. This is my exploration of used items for sale locally where I live in North Carolina.

Process

I scraped data from three sources: Craigslist.org, Facebook Marketplace, and the Letgo app. I then saved the wrangled data in a MongoDB database, because MongoDB works well with JSON-formatted data and Python dictionaries. Next, I performed exploratory data analysis using Tableau and the pandas library in Python. Finally, I experimented with the MonkeyLearn API to run a text summarizer model and a price extractor model.

You can read the original blog post on the New York Data Science Academy Blog here.

You can find the EDA & Data Viz with Tableau portion here.

You can find the scripts & further details about the project on Github here.

Over 10k Items Wrangled & Analyzed

Web Scraping

Utilized a combination of BeautifulSoup, Scrapy, & Selenium to wrangle data from multiple sources.
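
To give a feel for the scraping step, here is a minimal sketch of pulling title and price fields out of listing markup. It is not the project's actual scraper: it uses Python's built-in html.parser instead of BeautifulSoup, Scrapy, or Selenium so that it runs without extra installs, and the HTML snippet, class names, and the parse_listings helper are all made up for illustration. Real pages from each source are structured differently.

```python
from html.parser import HTMLParser

# Hypothetical listing markup; real pages from any of the three sources will differ.
SAMPLE_HTML = """
<ul>
  <li class="item"><span class="title">Oak desk</span><span class="price">$40</span></li>
  <li class="item"><span class="title">Road bike</span><span class="price">$125</span></li>
</ul>
"""


class ListingParser(HTMLParser):
    """Collect title/price pairs from <span class="title"> and <span class="price">."""

    def __init__(self):
        super().__init__()
        self.items = []      # one dict per <li class="item">
        self._field = None   # field name of the <span> currently open, if any

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "li" and attrs.get("class") == "item":
            self.items.append({})
        elif tag == "span" and attrs.get("class") in ("title", "price"):
            self._field = attrs["class"]

    def handle_data(self, data):
        # Only keep text that sits inside a recognized <span>.
        if self._field and self.items:
            self.items[-1][self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None


def parse_listings(html):
    """Return a list of {"title": ..., "price": ...} dicts found in the markup."""
    parser = ListingParser()
    parser.feed(html)
    return parser.items


listings = parse_listings(SAMPLE_HTML)
```

Each record comes back as a plain Python dictionary, which is the shape the later storage step expects.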

Database Storage

Utilized MongoDB to store wrangled data.
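
Because scraped records are already Python dictionaries, they map almost directly onto MongoDB documents. The sketch below shows one way that hand-off could look. The field names, the to_document and save_documents helpers, the connection URI, and the "used_items" / "listings" database and collection names are assumptions for illustration, not the project's real schema. Saving requires the pymongo package and a running MongoDB server, so that part is wrapped in a function and not called here.

```python
import re


def to_document(raw, source):
    """Turn one scraped record (a dict of strings) into a MongoDB-ready document."""
    # Pull the first number out of the price text, ignoring thousands separators.
    match = re.search(r"\d+(?:\.\d+)?", raw.get("price", "").replace(",", ""))
    return {
        "source": source,
        "title": raw.get("title", "").strip(),
        "price": float(match.group()) if match else None,
    }


def save_documents(docs, uri="mongodb://localhost:27017"):
    """Insert documents into MongoDB. Needs pymongo and a reachable server."""
    from pymongo import MongoClient  # imported lazily so the rest runs without pymongo

    client = MongoClient(uri)
    # Placeholder database and collection names.
    result = client["used_items"]["listings"].insert_many(docs)
    return result.inserted_ids


docs = [
    to_document({"title": " Oak desk ", "price": "$1,240"}, "craigslist"),
    to_document({"title": "Free couch", "price": "free"}, "letgo"),
]
```

Listings without a numeric price keep a price of None, which MongoDB stores as null, so they can be filtered out or handled separately during analysis.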

Exploratory Data Analysis

Utilized Python Pandas library & Tableau to tidy, segment, & geocode data on items.
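
As a rough illustration of the tidy and segment steps in pandas, the sketch below cleans a price column and summarizes it per source. The rows and column names are made up and are not taken from the scraped dataset. Geocoding is left out because it depends on an external service.

```python
import pandas as pd

# Made-up rows standing in for scraped listings.
df = pd.DataFrame([
    {"source": "craigslist", "title": "Oak desk", "price": "$40"},
    {"source": "craigslist", "title": "Road bike", "price": "$125"},
    {"source": "letgo", "title": "Free couch", "price": "free"},
    {"source": "letgo", "title": "Lamp", "price": "$15"},
])

# Tidy: strip currency symbols and commas, then coerce non-numeric prices to NaN.
df["price"] = pd.to_numeric(
    df["price"].str.replace(r"[$,]", "", regex=True), errors="coerce"
)

# Segment: number of priced listings and median price per source.
# Both "count" and "median" skip NaN values.
summary = df.groupby("source")["price"].agg(["count", "median"])
```

A table like summary can then be exported to CSV and opened in Tableau for charting.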